By: Nick Gambino

We all know the Apollo 11 mission to the moon was a success, with Neil Armstrong taking that famous first step. Well, most of us know that anyway. There are those who believe Stanley Kubrick faked the moon landing. I guess reality is a luxury and not a right.

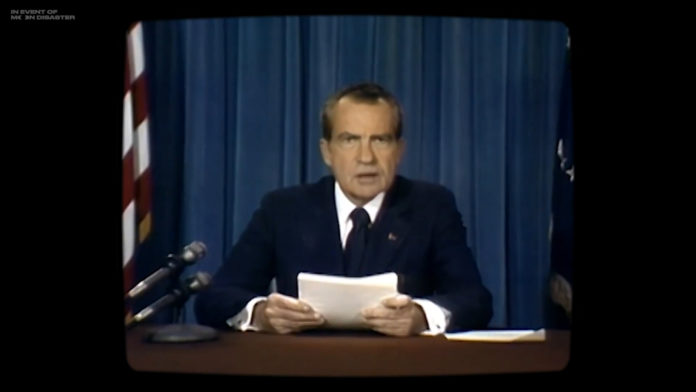

But what if the Apollo 11 mission had ended in failure and our astronauts were stuck on that satellite rock, left to die? A new deepfake video produced by MIT features President Richard Nixon delivering a tragic speech announcing the failed mission. The video looks disturbingly real and shows how far deepfake technology has advanced in just a couple of years. One small step for technology, one giant horror story in the making.

The full film, In Event of Moon Disaster, starts with some archival footage edited in such a way as to look like the mission had failed. It’s then followed by Nixon delivering his morbid speech. He looks and sounds just like the Nixon we all know. The camera even zooms in for the second half of the speech just to show you how solid this forgery is.

The video relies on footage of Nixon’s resignation speech to pull it off. It keeps all of his mannerisms and movements from that real video, with a newly mapped face taken from an actor saying the words of the doom-and-gloom speech.

To get the voice just right, they recorded the same actor reading hundreds of Nixon speeches. From there they were able to create an audio AI model. Once it learned the patterns of both voices, the AI was able to convert the actor’s voice into Nixon’s.

The speech itself is a real speech that can be read in the National Archives. It was written as a contingency if the mission went wrong, but for obvious reasons was never recorded.

“In Event of Moon Disaster is an immersive project inviting you into an alternative history, asking us all to consider how new technologies can bend, redirect and obfuscate the truth around us,” the website reads.

The implications of this video are scary. We’re looking at a technology that can be used to create forgeries that are nearly impossible to tell apart from the real thing. The malicious applications are endless, and unless we have the technology to clearly identify a deepfake, we’re in big trouble.

“It is our hope that this project will encourage the public to understand that manipulated media plays a significant role in our media landscape,” Halsey Burgund, co-director of the short film, says, “and that, with further understanding and diligence, we can all reduce the likelihood of being unduly influenced by it.”